Thoughts on Workflows for Teams

In Making Work Visible, Dominica DeGrandis defines Lead Time as the time between a feature request and the feature's delivery. On a standard Kanban board, Lead Time is the time it takes a card to reach the Done column, measured from when it is added to the To Do column.

Lead Time also breaks down into two component metrics. The time it takes a card to move from To Do to Doing is the Backlog Wait Time, and the time it takes to move from Doing to Done is the Cycle Time.
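To make these three definitions concrete, here is a minimal Python sketch that computes them from the timestamps at which a card enters each column. The function name `flow_metrics`, the choice of days as the unit, and the sample dates are illustrative assumptions, not something taken from DeGrandis's book.

```python
from datetime import datetime

SECONDS_PER_DAY = 86400


def flow_metrics(todo_at: datetime, doing_at: datetime, done_at: datetime) -> dict:
    """Split one card's Lead Time into Backlog Wait Time and Cycle Time, in days."""
    backlog_wait = (doing_at - todo_at).total_seconds() / SECONDS_PER_DAY
    cycle = (done_at - doing_at).total_seconds() / SECONDS_PER_DAY
    return {
        "backlog_wait_time": backlog_wait,  # To Do -> Doing
        "cycle_time": cycle,                # Doing -> Done
        "lead_time": backlog_wait + cycle,  # To Do -> Done
    }


card = flow_metrics(
    todo_at=datetime(2021, 3, 1),
    doing_at=datetime(2021, 3, 11),
    done_at=datetime(2021, 3, 15),
)
print(card)  # {'backlog_wait_time': 10.0, 'cycle_time': 4.0, 'lead_time': 14.0}
```

In this hypothetical card, most of the Lead Time was spent waiting in the backlog rather than being worked on.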

The goal for an organization concerned with feature delivery is to improve two aspects of Lead Time: raw Lead Time and Lead Time Variability. Features need to ship as quickly and as predictably as possible. To make that happen, modern companies organize developers into small teams that integrate as many skill sets as possible. In a traditional organization, the UI/UX design team may need to hand a design to the front end engineering team for implementation. Because these are two different teams with two different backlogs, that design may languish in the front end team's To Do column, leaving the feature in a wait state and extending its overall Lead Time. In that scenario, pairing a UI/UX designer with a front end engineer makes sense because it eliminates a wait state. If we extend that same line of reasoning, we end up with UI/UX, front end engineering, back end engineering, database, security, and infrastructure competencies on a single team.
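Lead Time Variability needs a number before it can be improved. One simple option, assumed here rather than taken from the book, is the standard deviation of completed cards' Lead Times. In this sketch, both hypothetical teams average 10 days per card, but only one of them is predictable.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def summarize_lead_times(lead_times_days: Sequence[float]) -> Tuple[float, float]:
    """Return (average Lead Time, sample standard deviation) in days.

    stdev() needs at least two completed cards.
    """
    return mean(lead_times_days), stdev(lead_times_days)


# Hypothetical Lead Times (days) for five cards completed by each team.
predictable_team = [9.0, 10.0, 10.0, 11.0, 10.0]
erratic_team = [2.0, 25.0, 4.0, 15.0, 4.0]

print(summarize_lead_times(predictable_team))  # average 10, spread about 0.7 days
print(summarize_lead_times(erratic_team))      # average 10, spread about 9.8 days
```

A requestor working with the second team cannot plan around "about 10 days," even though the average is identical. Percentile ranges would work just as well as a variability measure.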

But that level of integration isn't always possible or desirable. Some competencies aren't required to complete every feature: not every feature needs a full stack performance analysis or a new authentication integration. In a recent conference talk, even Google stated that its SRE team is largely siloed from developers. So there are many cases in which it doesn't make sense to scatter specialists among the small teams. If we accept that some competencies will be siloed, how do we protect Lead Time and Lead Time Variability?

Backlog Management for a Siloed Team

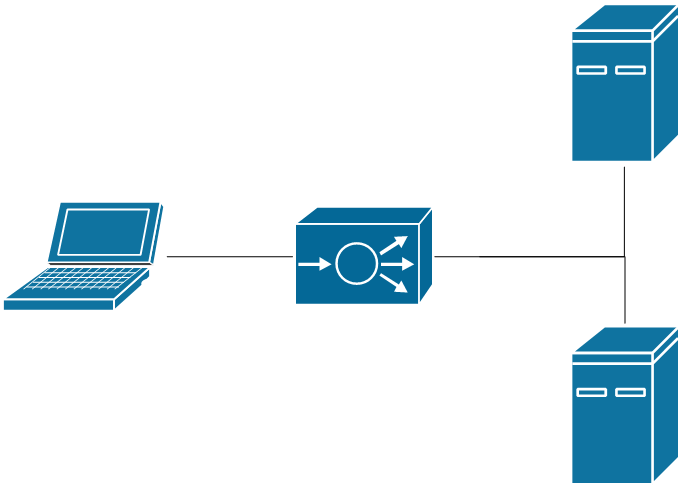

Network engineers spend a lot of time studying queueing. Routers need to decide where to send each packet, and those decisions take time. When the queue of packets waiting for a routing decision begins backing up, each packet waits longer before it is forwarded. A long enough queue can overwhelm the router. A router has only a limited amount of memory dedicated to packet queues, and when a queue is full, no more packets are allowed to get in line at all: every arriving packet is dropped, a 100% drop rate. If that happens, every computer and application that needs to get traffic through the router breaks down, and failure begins to cascade through the entire network.
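A few lines of Python can reproduce the failure mode described above. This is a toy model under stated assumptions: packets arrive and are forwarded at fixed per-tick rates, and a full queue simply discards new arrivals (often called tail drop). The function name and parameters are hypothetical; real routers are far more complex.

```python
from collections import deque
from typing import Tuple


def simulate_tail_drop(arrivals_per_tick: int, served_per_tick: int,
                       capacity: int, ticks: int) -> Tuple[int, int]:
    """Simulate a fixed-size FIFO packet queue; return (forwarded, dropped)."""
    queue = deque()
    forwarded = dropped = 0
    for tick in range(ticks):
        # New packets join the back of the queue only if there is room.
        for _ in range(arrivals_per_tick):
            if len(queue) < capacity:
                queue.append(tick)  # record the arrival tick
            else:
                dropped += 1  # queue full: the arriving packet is discarded
        # The router forwards a fixed number of packets per tick, oldest first.
        for _ in range(min(served_per_tick, len(queue))):
            queue.popleft()
            forwarded += 1
    return forwarded, dropped


print(simulate_tail_drop(arrivals_per_tick=4, served_per_tick=4, capacity=10, ticks=20))  # (80, 0)
print(simulate_tail_drop(arrivals_per_tick=5, served_per_tick=4, capacity=10, ticks=20))  # (80, 14)
```

When arrivals only slightly outpace forwarding, the queue grows every tick until it hits capacity, and from then on packets are dropped on every tick.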

So routers implement Quality of Service (QoS) and Active Queue Management (AQM) policies. The memory dedicated to waiting packets is divided into several queues of different priorities, and the network's QoS design decides what kind of traffic belongs in each queue. For example, VoIP traffic usually gets assigned to the highest-priority queue. When VoIP packets begin backing up, phone conversations start stuttering because of an increase in the variability of packet arrival times, called jitter.

AQM is implemented for each queue with the goal of preventing the queue from filling up. The most common algorithm for this is Random Early Detection (RED). RED defines two thresholds on the queue's average length: kind of busy and really busy. When the queue is kind of busy, RED begins dropping arriving packets at a low rate, and that rate rises as the queue grows. When the queue becomes really busy, RED drops every arriving packet. The logic behind this is that the sending applications can resend a dropped packet shortly afterward (usually 5-20 milliseconds later), so the actual service interruption is minimal, and the early drops signal senders to slow down before the queue overflows. Meanwhile, the most important, time-critical data sits in its own high-priority queue and always gets through on time.
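The two RED thresholds can be sketched as a drop-probability function. This follows the classic RED scheme from Floyd and Jacobson (a moving average of queue length, a linear ramp between a minimum and maximum threshold, and a cap `max_p`), but it omits the original algorithm's count-based adjustment between drops. The function names and the default `weight` are illustrative assumptions.

```python
def update_average(avg_queue: float, current_queue: int, weight: float = 0.002) -> float:
    """Exponentially weighted moving average of the queue length."""
    return (1 - weight) * avg_queue + weight * current_queue


def red_drop_probability(avg_queue: float, min_th: float, max_th: float,
                         max_p: float) -> float:
    """Probability that RED drops an arriving packet, given the average queue length."""
    if not 0 <= min_th < max_th:
        raise ValueError("thresholds must satisfy 0 <= min_th < max_th")
    if avg_queue < min_th:
        return 0.0  # not busy: never drop
    if avg_queue < max_th:
        # "Kind of busy": drop probability ramps linearly from 0 up to max_p.
        return max_p * (avg_queue - min_th) / (max_th - min_th)
    return 1.0  # "really busy": drop every arriving packet


for avg in (3, 10, 20):
    print(avg, red_drop_probability(avg, min_th=5, max_th=15, max_p=0.1))
```

Averaging the queue length means a short burst does not trigger drops by itself; only a queue that stays long does.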

Source: https://network.jecool.net/

What can we learn from this example about the siloed team's To Do column? The longer the queue, the longer any item will take to make it through the backlog. Think of a backlog grooming meeting: if a team has to go through and re-prioritize 300 cards to decide what to work on next, what are the chances that some really important items will be lost in the shuffle? And how far will that meeting run over, eating into the time available to work active items? If no mechanism is in place to restrict queue length, Lead Time will increase because of both increased Backlog Wait Time AND increased Cycle Time.
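The original doesn't name it, but Little's Law from queueing theory captures the same intuition: in a stable system, the average time an item spends waiting equals the average number of items waiting divided by the average throughput. The sketch below applies it to a backlog; the 300-card backlog comes from the text, while the throughput of 15 cards per week is a hypothetical figure.

```python
def expected_backlog_wait(backlog_cards: int, cards_finished_per_week: float) -> float:
    """Little's Law (W = L / lambda): average weeks a card waits in the backlog.

    Assumes a stable system, where cards arrive about as fast as they are finished.
    """
    if cards_finished_per_week <= 0:
        raise ValueError("throughput must be positive")
    return backlog_cards / cards_finished_per_week


print(expected_backlog_wait(300, 15))  # 20.0 weeks
print(expected_backlog_wait(30, 15))   # 2.0 weeks
```

The team works exactly as fast in both cases. Only the queue length changed.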

Does that mean teams should start turning away work at random? Probably not. But team leads, product owners, and the like can begin telling requestors that "due to current obligations, the team is unlikely to be able to work on this request until Q4." These team leads can hold deferred requests in a place that isn't visible to the rest of the team. Deferred requests don't show up in the Kanban board's backlog, and thus don't take up team members' mental space unnecessarily.
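One way to picture this convention is as a capped backlog with a separate deferred list. The sketch below is hypothetical: `TeamIntake`, `submit`, `start_next`, and the first-come promotion rule are illustrative assumptions, not an existing tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TeamIntake:
    """Hypothetical intake policy: a visible backlog with a hard cap,
    plus a deferred list that only the team lead sees."""
    backlog_limit: int
    backlog: List[str] = field(default_factory=list)
    deferred: List[str] = field(default_factory=list)

    def submit(self, request: str) -> str:
        """Add a request to the visible backlog, or defer it if the backlog is full."""
        if len(self.backlog) < self.backlog_limit:
            self.backlog.append(request)
            return "accepted"
        self.deferred.append(request)  # kept off the Kanban board
        return "deferred"

    def start_next(self) -> Optional[str]:
        """Move the oldest backlog card to Doing and promote the oldest deferred request."""
        if not self.backlog:
            return None
        started = self.backlog.pop(0)
        if self.deferred:
            self.backlog.append(self.deferred.pop(0))
        return started


intake = TeamIntake(backlog_limit=2)
print([intake.submit(r) for r in ["A", "B", "C"]])  # ['accepted', 'accepted', 'deferred']
print(intake.start_next(), intake.backlog)          # A ['B', 'C']
```

In practice a team lead would re-prioritize the deferred list before promoting anything. The point of the cap is that the team never sees more than `backlog_limit` cards at once.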

Once we put conventions in place to protect the queue length, what can we do to reduce Cycle Time and Cycle Time Variability?

Decreasing Cycle Time for Siloed Teams

To protect Lead Time, it is necessary to reduce individual utilization. When someone isn't that busy, it's far easier for them to pick up an urgent work item. When someone isn't that busy, it's far easier to chase all the fine details of a particular request to completion, rather than just doing the essential work and deferring less urgent tasks like documentation (or maybe that's just me). We can make a large part of that utilization visible by enforcing Work In Progress (WIP) limits for individual and team workloads. And for the rest of that utilization, we need to examine overhead.
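Why does moderate utilization matter so much? The original gives no model, so this sketch assumes the textbook M/M/1 queue (random arrivals, random service times, one worker). Under that assumption, the average time an item spends in the system is the hands-on service time divided by (1 - utilization). Real teams are not M/M/1 queues, but the shape of the curve is the point.

```python
def time_in_system_multiplier(utilization: float) -> float:
    """M/M/1 queue: average time in system divided by average service time.

    W = (1 / mu) / (1 - rho), so the multiplier is 1 / (1 - rho).
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return 1 / (1 - utilization)


for rho in (0.5, 0.8, 0.9, 0.95):
    # Prints multipliers of 2x, 5x, 10x, and 20x.
    print(f"{rho:.0%} busy -> {time_in_system_multiplier(rho):.0f}x the hands-on time")
```

Going from 90% to 95% busy doubles how long a request takes, even though each person looks only slightly busier.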

Application architecture has been moving toward microservices: autonomous, loosely coupled bits of code that each do one thing and one thing only. Because each functional component is isolated, the software environment is modular by design, making it easy to maintain, update, or even replace one component of a large application without affecting many dependencies. Previously, a single application server would run a large amount of monolithic code to do several different things, interact with several different external services, and return several different pieces of data to an end user.

These monoliths were architected, in large part, because of overhead. The original servers were all physical machines. The overhead in rack space, power, and cooling meant that fewer, beefier servers made more sense than many smaller ones. There was too much overhead to justify running tiny amounts of code on a server, so the monolithic design was more cost-effective. When server virtualization arrived, it became possible to run several segregated virtual machines (VMs) on a single physical server. The design message to developers and system administrators became "only run a single significant process on each VM." Functional decomposition was now limited by the overhead of replicated operating systems: each VM still had to run its own kernel, operating system, networking stack, and all the related processes that keep those things operating. Over the past decade, the industry has eliminated even more of that overhead with container technology. Containers package only an application and its software dependencies, sharing the host's kernel and operating system with tens or hundreds of other containers on a single system.

Right now, many knowledge workers carry overhead comparable to an old physical server. There is a constant barrage of emails, instant messages (IMs), meetings, tracking spreadsheets to update, websites to log into, training to complete, and dozens of other small tasks that need to exist alongside meaningful work. DeGrandis points out that project managers struggle to manage a project effectively when they are interrupted with requests for status updates several times a day. What opportunities exist to reduce the administrative work that individuals and teams need to manage? How can managers guard their teams' capacity the way a nervous sysadmin guards a problematic VM that "just needs one more agent installed on it, I promise"? Reducing or consolidating meeting attendance, email and IM presence, paperwork, training, and task and time tracking are all ways to reduce overhead, thereby reducing overall utilization and focusing attention on meaningful work.

The challenge is that this is a culture shift that goes beyond any single company. Our modern workplace culture rewards busyness by default, and employees feel like they're doing something wrong when there isn't much to do. Stories about companies like Amazon modeling what 100% output in a position looks like, and judging individuals against that standard, reinforce that anxiety. In knowledge work settings, it's always easy to soothe that anxiety by sending more emails, responding to more IMs, and attending more meetings.

But the cost of those interactions on real work is devastating. As told in Cal Newport's A World Without Email, the company RescueTime performed a study of its platform's users and found that half of them checked email or IM at least once every six minutes on average. In fact, most of those users never went more than an hour without checking email or IM. So most RescueTime users are unable to go a full hour without their work being interrupted. And since it can take up to 20 minutes to turn full attention back to a task, those small interruptions deal a significant blow to what any individual is able to accomplish. And the people who signed up for RescueTime are the ones who are mindful of how they spend their time! How do the rest of us stack up?
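The arithmetic behind that blow can be sketched with a deliberately simple model. It assumes, as a worst case, that every check of email or IM costs the full 20-minute refocus time mentioned above before attention is back on the task. The function name and the worst-case assumption are illustrative, not findings from the RescueTime study.

```python
def focused_minutes_per_hour(minutes_between_checks: float,
                             refocus_minutes: float = 20) -> float:
    """Rough upper bound on minutes of full attention per hour.

    Worst-case assumption: each check of email/IM costs the full
    refocus time before attention returns to the task.
    """
    if minutes_between_checks <= 0:
        raise ValueError("interval must be positive")
    focused_per_interval = max(0.0, minutes_between_checks - refocus_minutes)
    return 60 * focused_per_interval / minutes_between_checks


print(focused_minutes_per_hour(60))  # 40.0: one check per hour
print(focused_minutes_per_hour(6))   # 0.0: one check every six minutes
```

Under this model, a check every six minutes never leaves enough time to reach full attention at all.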

But wait! Meetings, emails, and IMs are how people communicate! How can someone get the information they need to complete a piece of work without these tools? It turns out that microservices architecture offers a suggestion for this, too.

Creating consistent interfaces is key to reducing back-and-forth communication and clarification. REST APIs are stateless: the server keeps no client session state between calls, so every request must carry the identifiers and inputs needed to complete it. CRUD conventions do much the same thing: a developer knows that providing create, read, update, and delete methods for an object covers the basic interactions with that object. A consistent interface with a functional team works in a very similar way. If all required information is provided up front, less back and forth is required to accomplish a goal.
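As a rough analogy, a team's intake form can behave like a stateless API request: it lists every field the team needs and reports anything missing before the request is submitted, rather than after a round of emails. The `TeamRequest` fields and the `missing_fields` helper below are hypothetical examples, not a real team's form.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class TeamRequest:
    """Hypothetical intake form: every field the team needs, supplied up front."""
    requester: Optional[str] = None
    summary: Optional[str] = None
    desired_outcome: Optional[str] = None
    deadline: Optional[str] = None
    system_affected: Optional[str] = None


def missing_fields(request: TeamRequest) -> List[str]:
    """Return the names of required fields that were left empty, in form order."""
    return [f.name for f in fields(request) if not getattr(request, f.name)]


req = TeamRequest(requester="alice", summary="New DNS record", system_affected="internal DNS")
print(missing_fields(req))  # ['desired_outcome', 'deadline']
```

The requestor fixes the gaps once, at submission time, instead of the team chasing them down one message at a time.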

That is quite a tall order: how do you guarantee that you can provide every single piece of necessary information up front? A lot of knowledge work is ambiguous, and things are always changing. Some of the teams I interact with have solved this problem quite well. I can submit a request to them and consistently expect a meeting to appear on my calendar soon after. In that meeting, all of the back and forth required to collect information, clarify intentions, and set requirements happens synchronously. Asynchronous communication through email and IM drops significantly after that meeting.

Conclusion

If a company or organization is concerned with delivering features to customers, it needs to take a serious look at how individuals and teams manage their workloads. If Lead Time is what matters, then teams need the freedom to restrict backlog length to something manageable. Teams need enough capacity that each individual maintains a moderate utilization. The company culture needs to emphasize completeness over busyness. Teams need to consolidate or abstract away overhead so that each individual faces fewer interruptions each day. And teams need to define better interfaces with one another to help reduce those small interruptions.

The companies and teams that transition to this workflow successfully will see more advantages than just protecting Lead Time. Employees will have a lower incidence of burnout, and they will feel more comfortable with their work/life balance. So turnover will decrease, helping the organization retain institutional knowledge. That retained knowledge will help with technical debt reduction and bug-squashing efforts, providing a more stable platform over time.