Python Web Scraping to Detect PCI Errors

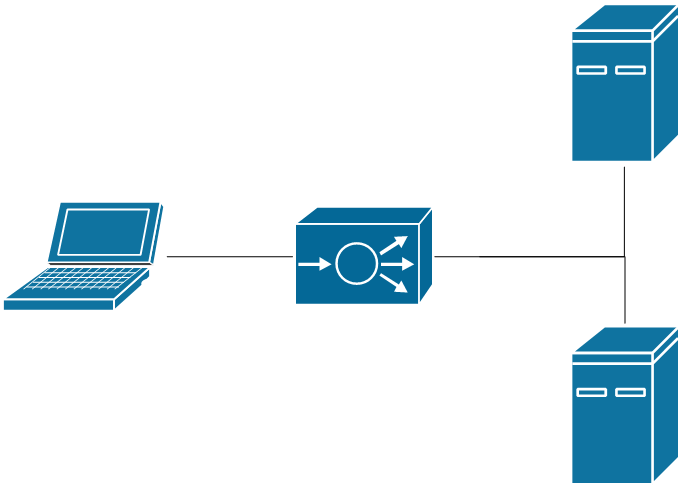

Right now, I am dealing with a little bit of instability within the packet capture environment at work. It turns out that when you completely overload one of these devices, it logs a PCI error and then stops responding to everything. It can be quite high effort to recover the device, requiring in at least one case a complete rebuild of the filesystem. The situation brings up imagery of a clogged toilet. And much like a clogged toilet, even clearing it out can still leave some issues down the line. I want to determine the true root cause.

The method to do that is to force a massive amount of traffic to one of the probes in our development environment, let it choke, and react quickly (within a day) to recover the probe, grab crash data, and send it to the vendor. Crash detection is most accurately performed by monitoring for a PCI error. These are visible in the IPMI console's event log (think iLO). However, I really don't want to take a couple of minutes every day to log in and check it. So, let's automate it!

Python Web Scraping Options

- Requests - This is a popular third-party Python library that excels at HTTP interaction. I will likely be using this to perform the GETs and POSTs I need.

- Beautiful Soup (BS4) - This module is built for parsing HTML. If the information I need is in a <div> with a specific name, BS4 is the best way to get it.

- Selenium - This is a bit of a long shot. If the information is embedded in a Java web applet or something that I can't interact with any other way, Selenium remotely controls a web browser. It's a little annoying to work with for simpler web interaction, so I really want to avoid this.

Investigation to Determine Scraping Strategy

To determine which approach to take, I like to start by performing my normal browsing with the browser's developer mode turned on. As you can see below, this bit of navigation involved 80 HTTP requests/responses.

Sloppily redacted browser window

As I look through all this information, there are a number of things that jump out as very relevant. First, I see a POST to /cgi/login.cgi. Nothing about the headers looks all that important, but the Params tab shows that there's a form with username and password. So, authentication will require me to pass a username and password to /cgi/login.cgi.

Next, I need to see how the information I care about is being delivered. One of the many POSTs to /cgi/ipmi.cgi returns a bunch of XML with timestamps that match up with the event log table. However, the actual values aren't human readable.

<?xml version="1.0"?>

<IPMI>

<SEL_INFO>

<SEL TOTAL_NUMBER="0011"/>

<SEL TIME="2019/04/27 11:44:30" SEL_RD="0100029e40c45c20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>

<SEL TIME="2019/06/12 19:01:38" SEL_RD="020002124c015d33000413006fa58012" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/14 18:10:39" SEL_RD="0300021fe3035d200004c8ff6fa0ffff" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/14 18:14:23" SEL_RD="040002ffe3035d20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>

<SEL TIME="2019/06/14 19:20:16" SEL_RD="05000270f3035d33000413006fa58012" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/17 18:30:51" SEL_RD="0600025bdc075d200004c8ff6fa0ffff" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/17 18:34:34" SEL_RD="0700023add075d20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>

<SEL TIME="2019/06/17 19:17:55" SEL_RD="08000263e7075d33000413006fa58012" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/17 19:46:05" SEL_RD="090002fded075d200004c8ff6fa0ffff" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/17 19:49:53" SEL_RD="0a0002e1ee075d20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>

<SEL TIME="2019/06/18 13:04:42" SEL_RD="0b00026ae1085d200004c8ff6fa0ffff" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/18 13:08:28" SEL_RD="0c00024ce2085d20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>

<SEL TIME="2019/06/18 15:25:47" SEL_RD="0d00027b02095d200004c8ff6fa0ffff" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/18 15:29:31" SEL_RD="0e00025b03095d20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>

<SEL TIME="2019/06/18 17:59:54" SEL_RD="0f00029a26095d200004c8ff6fa0ffff" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

<SEL TIME="2019/06/18 18:03:41" SEL_RD="1000027d27095d20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>

<SEL TIME="2019/06/18 20:38:09" SEL_RD="110002b14b095d33000413006fa58012" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>

</SEL_INFO>

</IPMI>

It's also important to note that the POST includes a form that requests:

SEL_INFO.XML : (1, c0)

So, now we know how to authenticate ourselves and how to get the data we need, but we don't yet have a way to interpret it. After more searching around, the HTML file returned from a GET to /cgi/url_redirect.cgi?url_name=servh_event includes a block of Javascript code that decodes that XML. While I probably could somehow run the XML through that Javascript code, I'm just going to understand how it works and recreate the parts I need.

Based on this investigation, it looks to me like I can get everything I need only using Requests. No need to involve those other modules.

Interpreting the XML Values

Through careful analysis of the Javascript code (and a lot of Ctrl-Fs), it looks like there are two really relevant pieces of code. The first is a switch on a value called tmp_sensor_type. Apparently, if this variable has the value 0x13 that means there's a PCI error. Also, I don't know who Linda is, but I appreciate that she made this whole thing possible.

case 0x13: //Linda added PCI error

//alert("PCI ERR message detected! value = "+ sel_traveler[10]);

var PCI_errtype = sel_traveler[10].substr(7,1);

PCI_errtype = "0x" + PCI_errtype;

var bus_id = sel_traveler[10].substr(8,2);

var fnc_id = sel_traveler[10].substr(10,2);

sensor_name_str = "Bus" + bus_id.toString(16).toUpperCase();

sensor_name_str += "(DevFn" + fnc_id.toString(16).toUpperCase() + ")";

if(PCI_errtype == 0x4)

tmp_str = "PCI PERR";

else if(PCI_errtype == 0x5)

tmp_str = "PCI SERR";

else if(PCI_errtype == 0x7)

tmp_str = "PCI-e Correctable Error";

else if(PCI_errtype == 0x8)

tmp_str = "PCI-e Non-Fatal Error";

else if(PCI_errtype == 0xa)

tmp_str = "PCI-e Fatal Error";

else

tmp_str = "PCI ERR";

break;

The second relevant piece of code shows how tmp_sensor_type is determined. If it were in one discrete block, that would be nice. Instead, it's spread across a few hundred lines. Tracing through the variable assignments, I can see that:

tmp_sensor_type = sel_traveler[3];

I can also see:

sel_traveler = sel_buf[j];

It also appears that:

sel_buf[i-1][3] = stype;

And if I look at how we get stype, I find a line with an extremely helpful comment:

stype = parseInt(ch[5],16) ; \ take 11th byte

I don't know anything about Javascript, but I can find the 11th byte of something. And sure enough, if I go back to SEL_RD from my XML output, the 11th byte of all the PCI errors is 0x13.
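As a quick sanity check of that observation, here is a small sketch that pulls the 11th byte out of two of the SEL_RD values from the XML above. The helper name `sensor_type` is my own invention for illustration; it does not appear in the vendor's code.

```python
def sensor_type(sel_rd):
    """Return the 11th byte (index 10) of a hex-encoded SEL record."""
    record = bytes.fromhex(sel_rd)  # two hex characters per byte
    return record[10]               # zero-based, so index 10 is byte 11


pci_event = "020002124c015d33000413006fa58012"      # a "NO Sensor String" entry
chassis_event = "0100029e40c45c20000405516ff0ffff"  # a "Chassis Intru" entry

print(hex(sensor_type(pci_event)))      # 0x13
print(hex(sensor_type(chassis_event)))  # 0x5
```

Because each byte is written as two hex characters, the same byte also sits at string positions 20 and 21 of SEL_RD, so a plain string slice works just as well as converting to bytes first.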

Structure of the Python Script

Now that my investigation is done, I can identify what I need my Python script to do:

- Get the IPMI URL or IP address from the command line.

- Authenticate against /cgi/login.cgi.

- POST to /cgi/ipmi.cgi with the appropriate form.

- Navigate the resulting XML to find my SEL_RD values.

- See if the 11th byte is 0x13.

- Do something.

Implementation

Get the URL from the Command Line

This is pretty easy. It doesn't matter if there's a URL or IP address in the command line, and it's the only argument I'm taking.

import sys

url = sys.argv[1]
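If the script is run without an argument, `sys.argv[1]` raises an IndexError. A slightly more defensive variant might look like the sketch below. The function name `get_host`, the script name `check_pci.py`, and the address `10.0.0.5` are placeholders I made up for the example.

```python
def get_host(argv):
    """Return the single host argument, or exit with a usage message."""
    if len(argv) != 2:
        # SystemExit with a string prints it to stderr and exits with status 1
        raise SystemExit(f"usage: {argv[0]} <ipmi-host-or-ip>")
    return argv[1]


# In the real script this would be get_host(sys.argv); a literal list is
# used here so the example runs on its own.
url = get_host(["check_pci.py", "10.0.0.5"])
print(url)  # 10.0.0.5
```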

Authenticate against /cgi/login.cgi

I'm doing a couple noteworthy things here. First, I have my authentication details in a separate file called auth.py. Second, I'm using a Requests Session, which automatically keeps track of cookies and other session information for me.

import requests

from auth import ipmi_username, ipmi_pwd

auth_form = {'name': ipmi_username, 'pwd': ipmi_pwd}

ipmi_sesh = requests.Session()

ipmi_sesh.post(f'http://{url}/cgi/login.cgi', data=auth_form)
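The login call above ignores the response, so a failed request would only show up later. One possible way to wrap it is sketched below. The `login` function and its `timeout` default are my own additions, and the session is passed in so the function can be exercised without a real device. Note that a successful HTTP status does not prove the credentials were accepted; I have not checked what this BMC returns on a bad password.

```python
def login(session, host, username, password, timeout=10):
    """POST the login form and fail loudly on an HTTP error status."""
    response = session.post(
        f"http://{host}/cgi/login.cgi",
        data={"name": username, "pwd": password},
        timeout=timeout,  # avoid hanging forever on an unresponsive device
    )
    response.raise_for_status()  # requests raises HTTPError on 4xx/5xx
    return response


# Usage (needs a reachable device, so it is left commented out):
# import requests
# from auth import ipmi_username, ipmi_pwd
# ipmi_sesh = requests.Session()
# login(ipmi_sesh, url, ipmi_username, ipmi_pwd)
```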

Request the Event Log Data

I already have my HTTP session set up, so I just need to ask for what I need.

event_log_form = {'SEL_INFO.XML': '(1,c0)'}

response = ipmi_sesh.post(f'http://{url}/cgi/ipmi.cgi', data=event_log_form)

Get SEL_RD from the Returned XML

Python's standard library includes xml.etree.ElementTree, which makes XML relatively easy to interpret. Iterating over an element yields its child elements, much like looping over a list.

import xml.etree.ElementTree as ET

ipmi_events = ET.fromstring(response.text)

events = []

for SEL_INFO in ipmi_events:

    for SEL in SEL_INFO:

        events.append(SEL.attrib)

At this point, I have a list of dictionaries, where each dictionary includes the attributes of each SEL from the XML.

for event in events[1:]:  # the first entry only holds the total event count, so skip it

    print(event.get('SEL_RD'))

I'm not actively printing SEL_RD in the final script, but that's how I isolate it.
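For anyone who wants to try this without a device handy, here is a self-contained sketch that parses a trimmed copy of the XML shown earlier (the count entry plus two events). It is an alternative to the nested loops, not what the final script does: `iter("SEL")` walks the whole tree, and filtering on the presence of SEL_RD skips the count entry without relying on its position. The name `sel_records` is mine.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the response shown earlier.
SAMPLE = """<?xml version="1.0"?>
<IPMI>
<SEL_INFO>
<SEL TOTAL_NUMBER="0011"/>
<SEL TIME="2019/04/27 11:44:30" SEL_RD="0100029e40c45c20000405516ff0ffff" SENSOR_ID="Chassis Intru " ERTYPE="6f"/>
<SEL TIME="2019/06/12 19:01:38" SEL_RD="020002124c015d33000413006fa58012" SENSOR_ID="NO Sensor String" ERTYPE="FF"/>
</SEL_INFO>
</IPMI>"""


def sel_records(xml_text):
    """Return the attribute dicts of every <SEL> element that has a SEL_RD."""
    root = ET.fromstring(xml_text)
    # iter() visits matching elements at any depth, so nesting does not matter
    return [sel.attrib for sel in root.iter("SEL") if "SEL_RD" in sel.attrib]


for record in sel_records(SAMPLE):
    print(record["TIME"], record["SEL_RD"])
```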

Check the 11th Byte

Slicing byte arrays and strings in Python still doesn't come intuitively to me. It took some trial and error to find the [20:22].

if event.get('SEL_RD')[20:22] == '13':

    # do something

Do Something

For this example, my 'do something' is just printing the timestamp, but we can record it in a log, send an email, or do whatever else we want.

print(f"Found PCI SERR at {event.get('TIME')}")
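If printing isn't enough, recording the hits in a log is a small step further. The sketch below uses the standard logging module. The logger name `pci_watch`, the function names, and the sample events are made up for the example; only the `[20:22] == '13'` check comes from the steps above.

```python
import logging

logging.basicConfig(level=logging.WARNING, format="%(asctime)s %(message)s")
logger = logging.getLogger("pci_watch")  # hypothetical logger name


def find_pci_errors(events):
    """Return the TIME of every event whose 11th byte (hex chars 20-21) is 13."""
    return [
        event["TIME"]
        for event in events
        if event.get("SEL_RD", "")[20:22] == "13"
    ]


def log_pci_errors(events):
    """Write one warning per PCI error and return how many were found."""
    timestamps = find_pci_errors(events)
    for timestamp in timestamps:
        logger.warning("Found PCI error at %s", timestamp)
    return len(timestamps)


# Attribute dicts shaped like the ones collected from the XML.
sample_events = [
    {"TOTAL_NUMBER": "0011"},
    {"TIME": "2019/04/27 11:44:30", "SEL_RD": "0100029e40c45c20000405516ff0ffff"},
    {"TIME": "2019/06/12 19:01:38", "SEL_RD": "020002124c015d33000413006fa58012"},
]
log_pci_errors(sample_events)
```

Passing `filename=` to `logging.basicConfig` would send the same messages to a file instead of the console, which is handy if the script runs from a scheduler.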

Conclusion

Python web scraping is surprisingly easy, and it would be a whole lot easier if I didn't have to learn to read Javascript to make my example work.

You can check out the full script on GitHub.